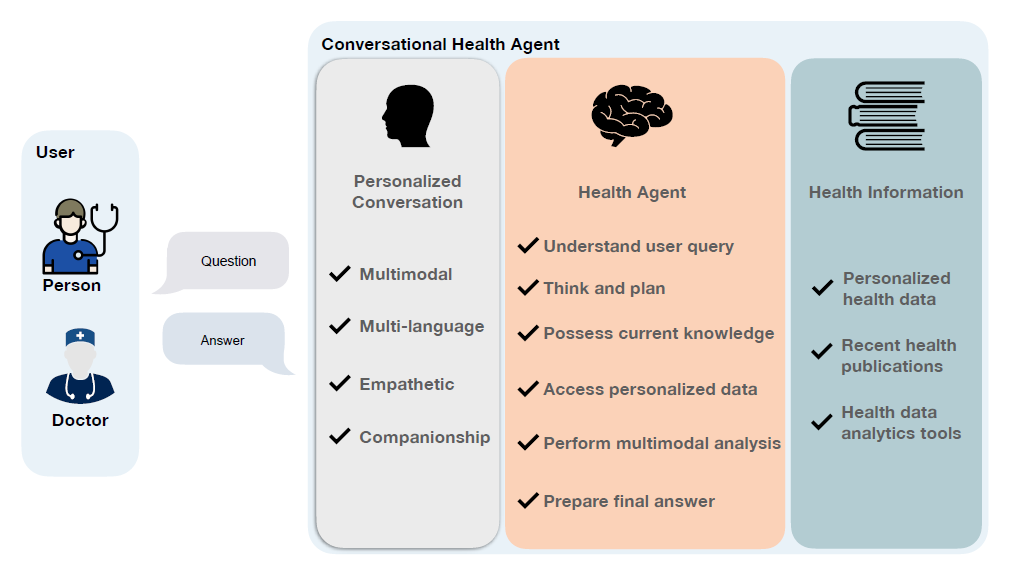

Conversational Health Agents (CHAs) are interactive systems that provide healthcare services, such as assistance and diagnosis. Current CHAs, especially those built on Large Language Models (LLMs), focus primarily on conversation. However, they offer limited agent capabilities: they lack multi-step problem-solving, personalized conversations, and multimodal data analysis. We aim to overcome these limitations.

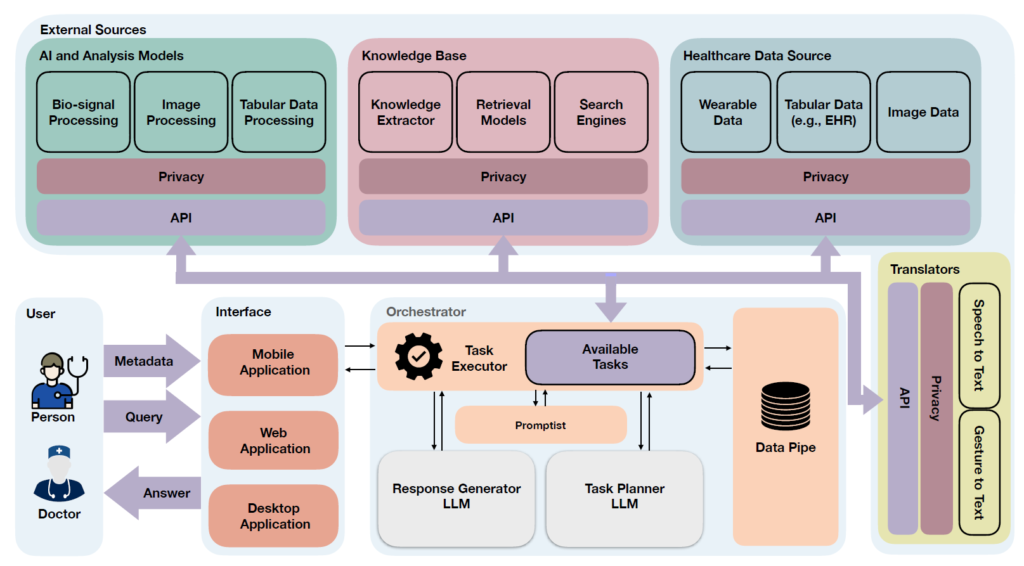

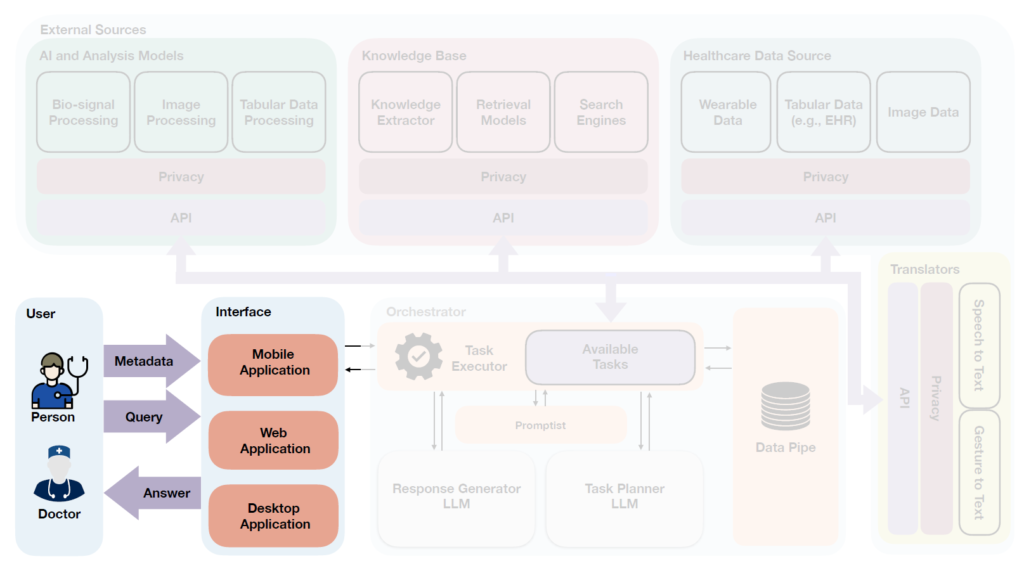

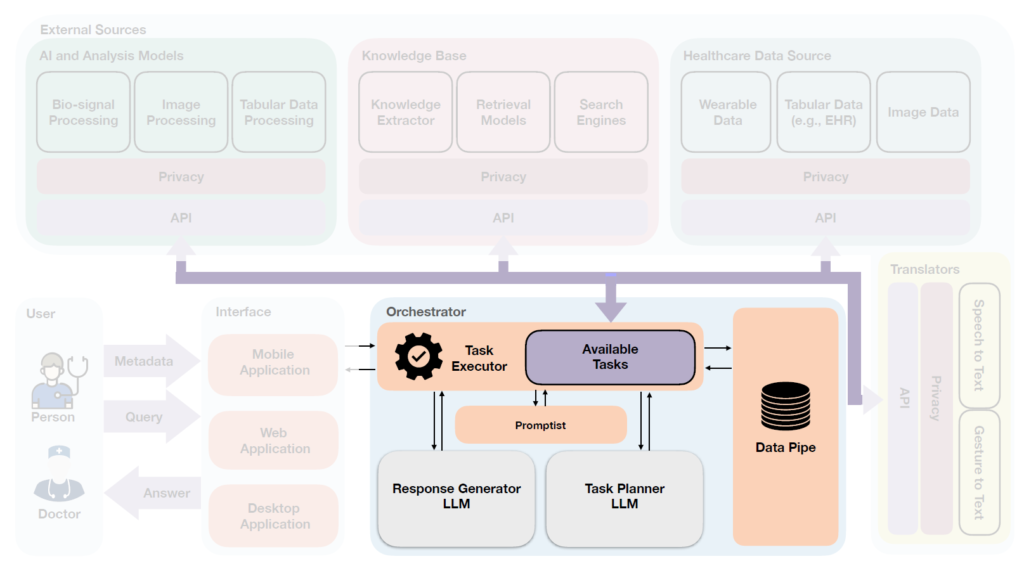

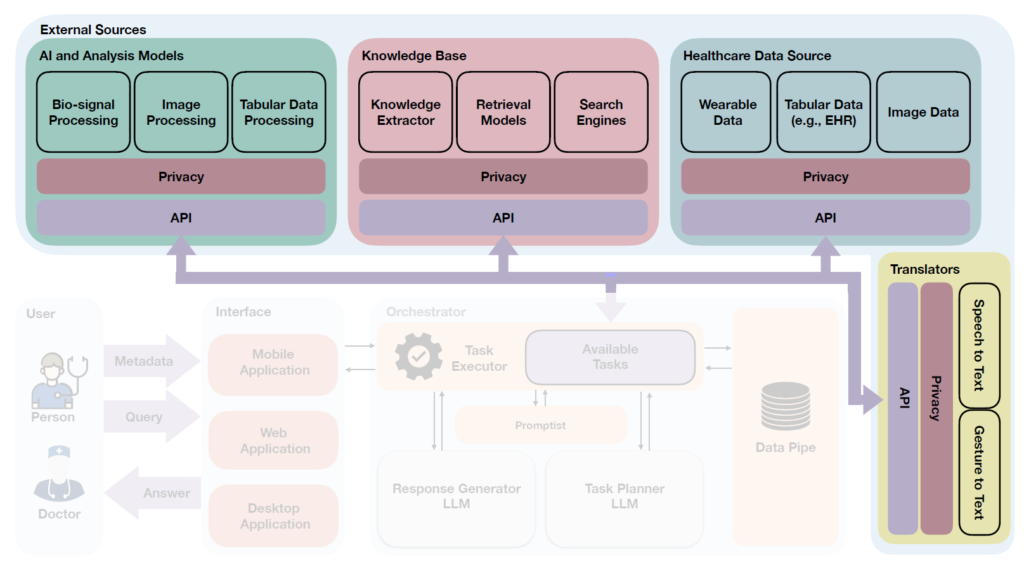

We design an LLM-powered framework with a central agent that perceives and analyzes user queries, provides appropriate responses, and manages access to external resources through Application Program Interfaces (APIs) or function calls. The user-framework interaction is bidirectional, ensuring a conversational tone for ongoing and follow-up conversations.

The framework includes three major components: the Interface, the Orchestrator, and External Sources.

The Interface acts as a bridge between users and agents, and includes interactive tools accessible through mobile, desktop, or web applications. It integrates multimodal communication channels, such as text and audio. The Interface receives users' queries and transmits them to the Orchestrator.

Within this framework, users can provide metadata (alongside their queries), including images, audio, gestures, and more. For instance, a user could capture an image of their meal and inquire about its nutritional values or calorie content, with the image serving as metadata.
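As a rough illustration of a query travelling with its metadata, the sketch below bundles the text and its attachments into one object before it is handed to the Orchestrator. The names `Metadata`, `UserQuery`, and `build_query` are hypothetical and chosen only for this example; they are not taken from openCHA.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Metadata:
    """One non-text attachment sent with a query (hypothetical structure)."""
    kind: str  # e.g. "image" or "audio"
    path: str  # where the Interface stored the uploaded file


@dataclass
class UserQuery:
    """What the Interface forwards to the Orchestrator in this sketch."""
    text: str
    metadata: List[Metadata] = field(default_factory=list)


def build_query(text: str, attachments: List[Tuple[str, str]]) -> UserQuery:
    """Bundle the query text with (kind, path) attachment pairs."""
    return UserQuery(text=text, metadata=[Metadata(k, p) for k, p in attachments])


# The meal example from the text: a question plus a photo as metadata.
query = build_query("How many calories are in this meal?", [("image", "meal.jpg")])
print(query.metadata[0].kind)
```

Keeping the attachments in a typed list, rather than embedding file paths in the text, lets a downstream component decide how to handle each modality.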

The Orchestrator is responsible for problem solving and decision making to provide an appropriate response based on the user query. It incorporates the concept of the Perceptual Cycle Model in CHAs, allowing it to perceive, transform, and analyze the world (i.e., input query and metadata) to generate appropriate responses. To this end, the input data are aggregated, transformed into structured data, and then analyzed to plan and execute actions. Through this process, the Orchestrator interacts with external sources to acquire the required information, perform data integration and analysis, and extract insights, among other functions.
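The perceive, plan, and execute flow described above can be sketched as follows. This is a minimal toy under stated assumptions, not the openCHA implementation: the class and method names are hypothetical, and a simple rule (match each metadata kind to a registered task) stands in for the LLM-based planning step.

```python
from typing import Callable, Dict, Iterable, List, Tuple

# Hypothetical task type: a callable standing in for a call to an external source.
Task = Callable[[str], str]


class Orchestrator:
    def __init__(self, tasks: Dict[str, Task]):
        self.tasks = tasks

    def perceive(self, text: str, metadata: Iterable[Tuple[str, str]]) -> dict:
        """Aggregate the query text and metadata into one structured record."""
        return {"text": text.strip(), "metadata": list(metadata)}

    def plan(self, record: dict) -> List[Tuple[str, str]]:
        """Toy planner: schedule one task per metadata item with a known kind."""
        return [(kind, ref) for kind, ref in record["metadata"] if kind in self.tasks]

    def execute(self, steps: List[Tuple[str, str]]) -> List[str]:
        """Run each planned step against its registered task."""
        return [self.tasks[name](arg) for name, arg in steps]

    def respond(self, text: str, metadata: Iterable[Tuple[str, str]] = ()) -> str:
        record = self.perceive(text, metadata)
        results = self.execute(self.plan(record))
        if not results:
            return f"No external data needed for: {record['text']}"
        return "; ".join(results)


def describe_image(path: str) -> str:
    """Placeholder for an image-analysis call; returns a canned string."""
    return f"analyzed image at {path}"


orchestrator = Orchestrator({"image": describe_image})
print(orchestrator.respond("What is in this meal?", [("image", "meal.jpg")]))
```

In a real system the planner would decide which tasks to run, and in what order, from the query itself; the rule-based `plan` here only makes the control flow visible.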

External Sources play a pivotal role in obtaining essential information from the broader world. Typically, these External Sources furnish application program interfaces (APIs) that the Orchestrator can use to retrieve required data, process them using AI or analysis tools, and extract meaningful health information. In openCHA, we integrate with four primary external sources, which we found critical for CHAs.
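To make the retrieve, process, and extract steps concrete, here is a small sketch of an external source hidden behind a common interface. Everything in it is an assumption for illustration: `ExternalSource`, `InMemoryHeartRateSource`, and the sample values are invented, and the in-memory class stands in for a real API call.

```python
from statistics import mean
from typing import Dict, List, Protocol


class ExternalSource(Protocol):
    """Hypothetical interface an external source exposes to the Orchestrator."""

    def fetch(self, user_id: str) -> List[float]: ...


class InMemoryHeartRateSource:
    """Stand-in for a real API; returns canned samples (illustrative data only)."""

    def __init__(self, samples: Dict[str, List[float]]):
        self._samples = samples

    def fetch(self, user_id: str) -> List[float]:
        return self._samples.get(user_id, [])


def summarize(source: ExternalSource, user_id: str) -> str:
    data = source.fetch(user_id)  # retrieve the required data
    if not data:
        return "no data available"
    average = mean(data)  # process it with an analysis step
    return f"average heart rate: {average:.0f} bpm"  # extract a readable insight


source = InMemoryHeartRateSource({"u1": [60.0, 70.0, 80.0]})
print(summarize(source, "u1"))
```

Because `summarize` depends only on the `fetch` method, a different source can be swapped in without changing the analysis code.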
